Not only is the online encyclopedia Wikipedia an indispensable platform for open knowledge on the Internet, it is also a prime example of how people and algorithms work hand in hand. After all, numerous bots assist the site’s volunteer editors while also keeping “vandals” at bay.

It’s mid-October, early afternoon in the United States, and many students have just come home from school. That means it’s rush hour for one of the most dedicated Wikipedia contributors: ClueBot NG, who is functioning as a gatekeeper. An unknown user enters the birthday of his 17-year-old girlfriend in the list of historic events that have taken place on April 27. ClueBot NG deletes the entry and sends the user a warning. Another author using the pseudonym Cct04 posts a full-throated complaint – “I DON’T KNOW WHAT THIS IS” – in the middle of an article about a government program that provides affordable housing. ClueBot NG deletes this vociferous comment. But this time he does more than just issue a warning. This is the third time this particular author has committed an act of vandalism, so ClueBot NG flags the user as a potential vandal. An administrator will now block Cct04 from contributing to the site.

Untiring fight against vandalism

ClueBot NG is not a human author, but a program that runs on the Wikimedia Foundation’s servers – a so-called bot. That means something has long been reality at Wikipedia which is still only being discussed by the rest of the world: automated “helpers” are deciding largely for themselves what humans can and cannot do. Who, for example, is allowed to add to the crowdsourced encyclopedia and who may not? What is appropriate content for an encyclopedia and what is not? ClueBot NG is one of 350 bots approved for use in the English-language version of Wikipedia. One in 10 of the 528 million editorial changes that have been made to the platform was carried out by a bot. There are also numerous semi-automated tools that make it possible for human editors to decide, with just a few clicks, how to deal with multiple entries. Without algorithmic decision-making, Wikipedia would look very different from the way it does today.

ClueBot NG is one of the site’s most active bots. On this October afternoon, the program will act up to 12 times per minute to fight vandals, warn trolls and thwart bored youngsters.

Spam filter for the world’s knowledge

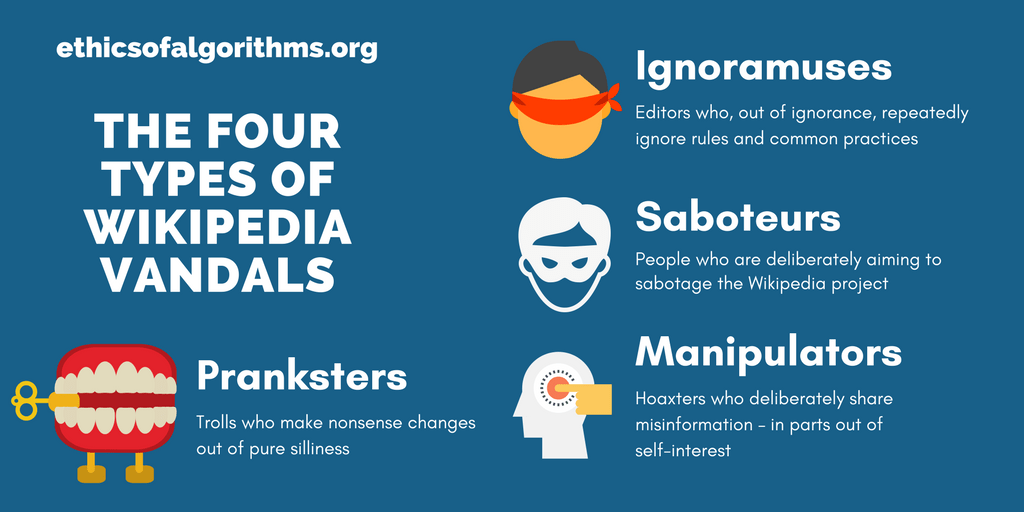

Without ClueBot NG’s ceaseless efforts, human authors would have to remove unwanted entries from the online encyclopedia by hand. Vandalism is defined as any change that is malicious and not intended to contribute to Wikipedia in a serious way. This includes everything from deleting entries and trolling to meticulously plotted manipulations designed to influence a company’s stock price. All too often authors find an article has been augmented with a picture of a penis and an obscene comment. Other additions are not considered vandalism: well-intentioned but poorly executed entries, for example, or when someone raises an issue about an article’s content – unless the author has already been contacted and continues to ignore the stated rules.

Yet bots can only intervene in the most obvious cases. Since the programs do not understand an article’s context and cannot check the content behind an inserted link, their ability to combat vandalism is limited. A human reader must look and decide if information added to a text is truly appropriate.

At the same time, bots have become largely indispensable. A study by R. Stuart Geiger and Aaron Halfaker showed that when ClueBot NG is out of commission, content that is clearly vandalism is still deleted, but it takes twice as long and requires considerable human effort – time Wikipedians would rather spend writing articles.

Even algorithms make mistakes

ClueBot NG is guided by an artificial neural network. Its self-learning algorithms work the way a spam filter in an e-mail program does. ClueBot NG is constantly fed new data about which changes human Wikipedia authors consider vandalism and which are acceptable as content for the site’s articles. The neural network then looks for similarities in the texts it examines, thereby generating rules for filtering content. The program uses those rules to calculate a value for every new Wikipedia entry, signaling the degree to which an edit corresponds to the known patterns of vandalism. Other parameters are also considered, such as a user’s past contributions. If the value exceeds a certain threshold, the bot goes to work.
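To make the idea of “calculate a value, then compare it to a threshold” more concrete, here is a minimal sketch in Python. It is not ClueBot NG’s actual code: a single logistic unit stands in for the neural network, and the features, weights, bias and threshold are all invented for illustration. A real system would learn its weights from edits that human authors have already labelled.

```python
import math
from dataclasses import dataclass


@dataclass
class Edit:
    added_text: str       # text the user inserted
    user_edit_count: int  # the user's past contributions


# Hypothetical values chosen by hand for this sketch; a real filter
# would learn them from edits labelled by human reviewers.
WEIGHTS = {"shouting": 3.0, "short": 1.0, "new_user": 1.5}
BIAS = -3.0
THRESHOLD = 0.9


def features(edit: Edit) -> dict[str, float]:
    """Turn an edit into a few numeric signals (all assumed, not ClueBot NG's)."""
    letters = [c for c in edit.added_text if c.isalpha()]
    upper_ratio = sum(c.isupper() for c in letters) / len(letters) if letters else 0.0
    return {
        "shouting": upper_ratio,                               # share of capital letters
        "short": 1.0 if len(edit.added_text) < 40 else 0.0,    # very brief addition
        "new_user": 1.0 if edit.user_edit_count < 5 else 0.0,  # little edit history
    }


def vandalism_score(edit: Edit) -> float:
    """Weighted sum of the features, squashed into the range 0..1."""
    signals = features(edit)
    z = BIAS + sum(WEIGHTS[name] * value for name, value in signals.items())
    return 1.0 / (1.0 + math.exp(-z))


def should_revert(edit: Edit) -> bool:
    """The bot only acts when the score exceeds the threshold."""
    return vandalism_score(edit) > THRESHOLD


shout = Edit("I DON'T KNOW WHAT THIS IS", user_edit_count=2)
calm = Edit(
    "The program was established in 1974 and is administered by local housing agencies.",
    user_edit_count=200,
)
print(should_revert(shout), should_revert(calm))
```

With these made-up numbers, the all-caps outburst from a user with almost no history lands above the threshold, while the longer sentence from an experienced contributor stays far below it. The sketch only looks at surface patterns, so it has no way of telling whether a statement is actually true.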

Naturally, the program is not perfect. Immediately after Cct04 was blocked, ClueBot NG also deleted an addition by a new user named Ariana, who noted that the actor Ellie Kemper was voicing a role in the children’s television series Sofia the First. It was true – but it was also something that ClueBot NG could not have known.

All that matters to the computer program is that a new user has made a change which looks like vandalism: It’s brief, contains a lot of names and is not “wikified” – i.e. does not conform to the conventions used for Wikipedia texts. The bot always leaves a detailed technical explanation and link on the user page which allows authors to report an incorrect deletion. But Ariana does not respond and does not add anything to the site over the next few days. It seems that Wikipedia has lost a new contributor.

Wikipedia is regularly exposed to vandalism attacks. It isn’t easy to distinguish well-intentioned but inexperienced users from those who consciously ignore the rules or even aim to spread disinformation.

One of the open Internet’s main pillars

“In 2003, the English-language Wikipedia community was still of the opinion that it would be better not to use bots. Today, however, bots are an everyday tool at Wikipedia,” says Claudia Müller-Birn, a professor at Freie Universität Berlin who researches human-computer collaboration and has carried out several studies on algorithms used by Wikipedia.

Since Wikipedia’s anarchic beginnings in 2001, the task of putting together a reliable encyclopedia has become increasingly complicated. On the one hand, the number of articles has grown, with the English version containing 5.5 million entries as of October 2017 and the German version 2.1 million. That means routine activities like fixing incorrect links or sorting lists can no longer be done by hand. On the other, the required tasks have become more complex. It is not so easy, for example, to add an accurate reference list to an article, and even creating info boxes requires a thorough introduction to Wikipedia’s guidelines.

As it has grown, Wikipedia has gained visibility. For instance, Google now regularly includes Wikipedia content in its search results, which means incorrect information – the news that someone who is still alive has died, for example – can spread within minutes if it is not immediately deleted from the site. This is where Wikipedia’s good reputation collides with its open design.

Not just one more social network

In addition, the Wikipedia community has to deploy its contributors wisely: While there were more than 50,000 Wikipedia authors at work on the English site in 2007, today there are only about 30,000. Helpers such as bots are not only meant to reduce the authors’ workload, they can also prevent human contributors from becoming frustrated. After all, anyone who has spent hours researching their hometown or a distant galaxy in order to create an encyclopedia-worthy article does not want the information they have provided ruined by nonsensical additions.

In the early years of Wikipedia, the supply of human contributors seemed almost endless. But while Wikipedia is continuously growing, the number of contributors is stagnating.

The key point here is that Wikipedia isn’t interested in becoming just one more social platform. The site does not want readers to have to decide for themselves which entries they can trust. Incorrect information doesn’t just fade from sight as it would on a timeline, where entries gradually disappear into the past. Thus, Wikipedians do their best to ensure a consensus exists on what is true and correct.

Wikipedians don’t view the site as a finished encyclopedia, but as a “project for creating an encyclopedia” – a project that is never supposed to be complete. In order to run a project that is never supposed to end, you need tenacious, untiring workers. ClueBot NG is one of them.

Wikipedians have learned to shape the social impact of algorithms in a positive way – for example by introducing registration procedures and rules for robots. More on that in Part 2 of our series.
