Algorithmic systems evaluate people, and this poses risks for us as individuals, for groups and for society as a whole. It is therefore important that algorithmic processes be auditable. Can the EU’s General Data Protection Regulation (GDPR) help foster this kind of oversight and protect us from the risks inherent to algorithmic decision-making? Wolfgang Schulz and Stephan Dreyer address these questions and more in an analysis commissioned by the Bertelsmann Stiftung.

In algorithmic processes, the processing of data itself poses less of a risk to users than the decisions made as a result. These decisions can affect not only us as individuals, in terms of our autonomy and personal rights, but also groups of people, in terms of discrimination. So far, however, the use of algorithms has continued largely without any form of social control. Against this background, Wolfgang Schulz and Stephan Dreyer explore the extent to which the GDPR, which went into effect in May 2018, supports oversight of algorithmic decision-making and thus protects us from the risks involved.

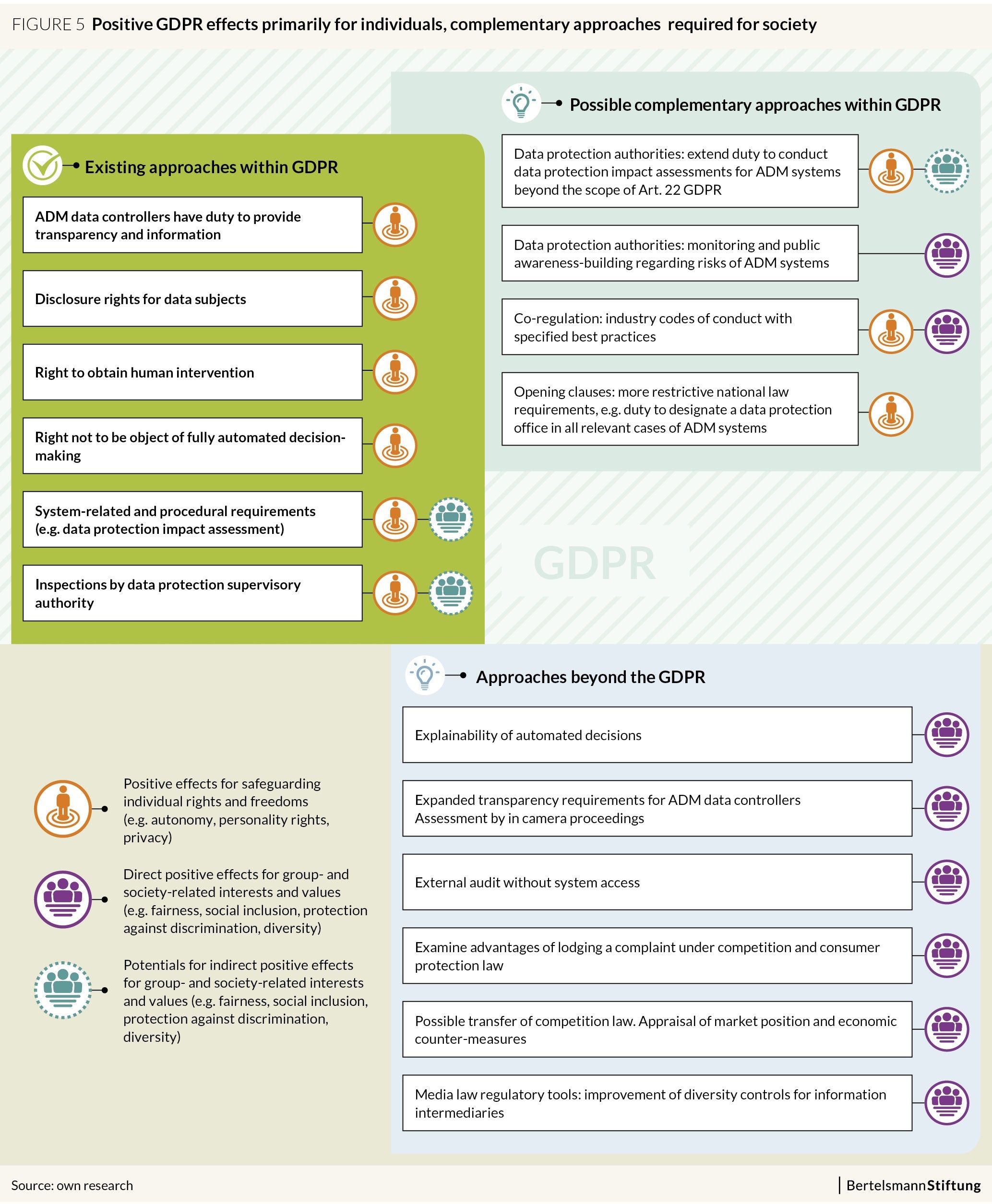

Their analysis shows that the EU regulation has limited impact for two reasons. First, the GDPR’s scope of application is narrow. It prohibits only those decisions that are fully automated and have an immediate legal impact or other significant effects. It still allows systems that prepare decisions and recommendations which a human then makes. Fully automated decisions are those made without any human involvement – for example, when software screens out applicants before a human recruiter has even looked at their documents. However, if a human being makes the final decision and draws on the support of an algorithmic system – for example, in granting credit – the GDPR’s prohibition does not apply. There are also exceptions to the ban – when the person concerned gives their consent, for example. As a result, algorithmic decision-making is becoming commonplace in our everyday lives.

GDPR does not protect against all risks associated with algorithmic decision-making

Second, the provisions of the GDPR strengthen to some extent the information rights of individual users and, as a result of stricter documentation obligations, foster greater awareness of data subjects’ rights among those responsible for data processing. The regulation, however, does not protect against socially relevant risks to principles such as fairness, non-discrimination and social inclusion, which extend beyond the data protection rights of the individual. The GDPR’s transparency requirements do not have the scope to address systematic errors and discrimination against entire groups of people.

Additional measures are therefore needed to improve the auditability of algorithmic systems. One such measure involves data protection authorities drawing attention to – and thereby raising public awareness of – the social risks inherent to algorithmic decision-making systems through the audits they conduct within the framework of the GDPR. Another option involves steps taken outside the framework of the GDPR that can foster oversight, such as reviews of algorithmic decision-making systems by external third parties or class-action suits pursued under consumer protection laws.

A PDF of the study “What Are the Benefits of the General Data Protection Regulation for Automated Decision-Making Systems” with a cover page (not under a Creative Commons license) is available here.

If you wish to re-use or distribute the working paper, a Creative Commons-licensed version without the cover page is available here.

This text is licensed under a Creative Commons Attribution 4.0 International License.
