Below we condense the 90+ pages of our paper “Digitale Öffentlichkeit” (full version in German: DOI 10.11586/2017028) into a short summary that answers three key questions:

- Media transformation: How is public discourse changing because of the new digital platforms through which many people now receive socially relevant information?

- Social consequences: In terms of quality and diversity, is the information that reaches people via these new channels suitable for the formation of basic democratic attitudes and does it promote participation?

- Solutions: How can the new digital platforms be designed to ensure they promote participation?

1. Media transformation: Intermediaries are a relevant but not determining factor for the formation of public opinion.

When all age groups are considered, intermediaries driven by algorithmic processes, such as Google and Facebook, have had a relevant but not defining influence on how public opinion is formed, compared to editorially driven media such as television. These intermediaries judge the relevance of content based on the public’s immediate reaction to a much greater degree than traditional media do.

Numerous studies have shown that so-called intermediaries such as Google and Facebook play a role in the formation of public opinion in countries including Germany. For example, 57 percent of German Internet users receive politically and socially relevant information via search engines or social networks. And although the share of users who say that social networks are their most important news source is still relatively small at 6 percent of all Internet users, this figure is significantly higher among younger users. It can thus be assumed that these types of platforms will generally increase in importance. The creation of public opinion is “no longer conceivable without intermediaries,” as researchers at the Hamburg-based Hans Bredow Institute put it in 2016.

The principles that these intermediaries use for shaping content are leading to a structural shift in public opinion and public life. Key aspects are:

- Decoupling of publication and reach: Anyone can make information public, but not everyone finds an audience. Attention is only gained through the interaction of people and algorithmic decision-making (ADM) processes.

- Detachment from publications: Each item or article has its own reach. Inclusion in a publication reduces the chances of becoming part of the content provided by intermediaries.

- Personalization: Users receive more information about their specific areas of interest.

- Increased influence of public on reach: User reactions influence ADM processes in general and the reach of each item or article.

- Centralization of selection agents: Intermediaries offer much less diversity than do traditionally curated media.

- Interplay of human and machine-based curation: Traditionally curated media disseminate content via intermediaries and use the resulting reactions on intermediary sites as a measure of public interest.

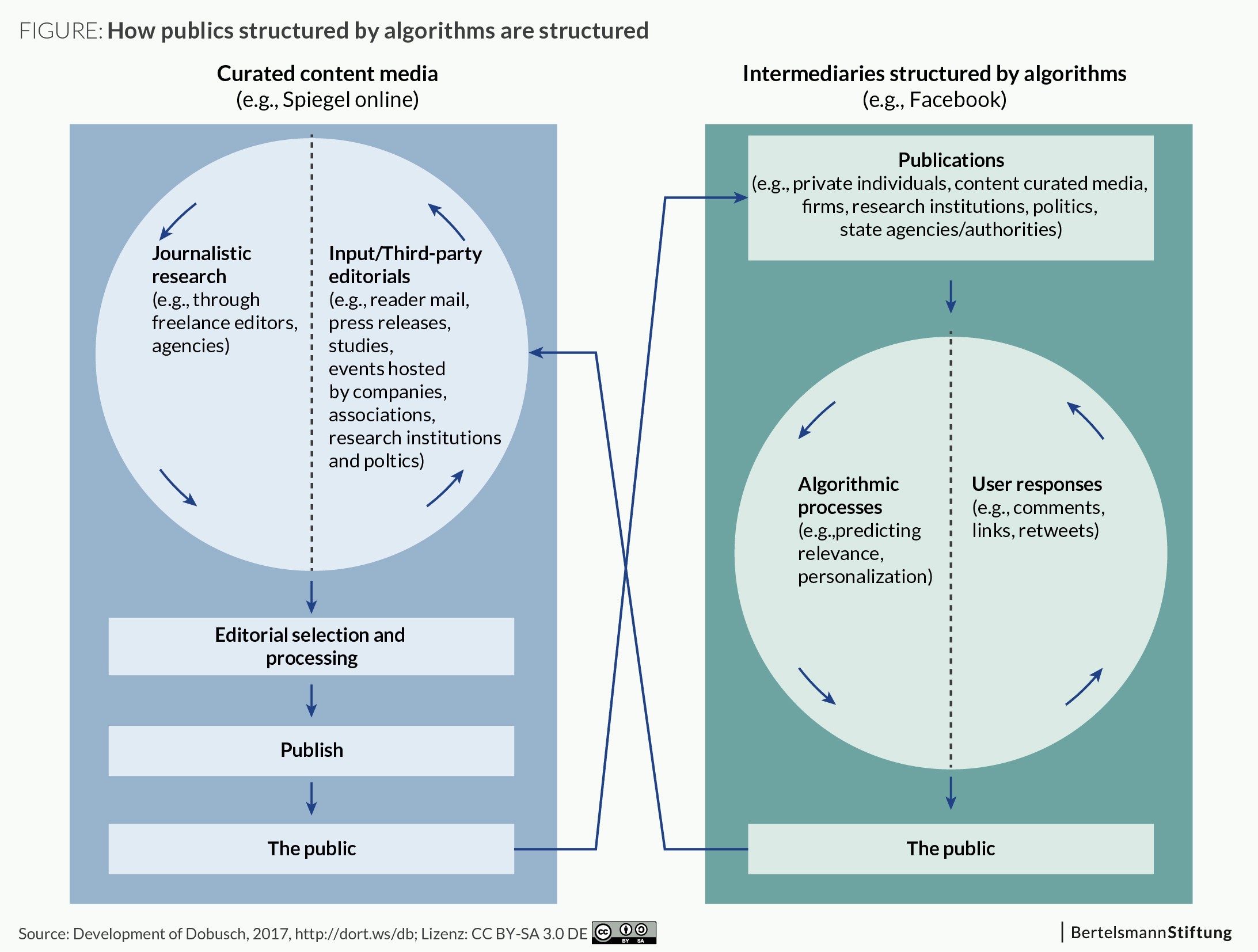

The comparison of processes in the accompanying graphic shows the key role now being played by user reactions and algorithmic processes in public communication and the formation of public opinion. These user reactions and algorithmic processes determine the attention received via intermediaries. Our hypothesis is that they cannot be definitively placed in a linear causal chain.

Google, Facebook and other intermediaries are already playing an important role in the public discourse, even though as a rule these platforms were not originally conceived to supply consumers with media content from journalistic organizations. They use technical systems instead to decide whether certain content taken from a huge pool of information could be interesting or relevant to a specific user. These systems were initially intended – in the case of search engines – to identify websites, for example, that contain certain information or – in the case of social networks – to display in a prominent position particularly interesting messages or photos from a user’s own circle of friends. In many cases they therefore sort content according to completely different criteria than editors at a daily newspaper or a magazine would. “Relevant” means something different to Google than it does to Facebook, and both understand it differently than the editors at SPIEGEL ONLINE or Sueddeutsche.de.

The intermediaries use numerous variables to calculate the relevance of individual items. These variables range from basic behavioral metrics such as scrolling speed or how long a page is viewed to the level of interaction among multiple users in a social network. When someone with whom a user has repeatedly communicated on Facebook posts content, the user is more likely to be shown it than content posted by someone with whom the user has a digital connection in theory but has never actually had contact. The inputs that other users provide – often unknowingly – are also included in assessments of relevance, whether they be links, clicks on links, clicks on the “like” button, forwards, shares or the number of comments that certain content receives.
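The interplay of these signals can be illustrated with a deliberately simplified sketch. It is not how any real platform computes relevance, since operators do not disclose their metrics. The `Item` fields, the `WEIGHTS` values and the linear formula are all assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Item:
    title: str
    dwell_seconds: float    # how long the page was viewed (behavioral metric)
    past_interactions: int  # earlier exchanges between the viewer and the poster
    likes: int              # reactions from other users
    shares: int
    comments: int


# Hypothetical weights, invented for this example only.
WEIGHTS = {"dwell": 0.1, "tie": 2.0, "likes": 0.5, "shares": 1.5, "comments": 1.0}


def relevance(item: Item) -> float:
    """Toy relevance score: a weighted sum of the signals named in the text."""
    return (
        WEIGHTS["dwell"] * item.dwell_seconds
        + WEIGHTS["tie"] * item.past_interactions
        + WEIGHTS["likes"] * item.likes
        + WEIGHTS["shares"] * item.shares
        + WEIGHTS["comments"] * item.comments
    )


def rank(items):
    """Sort items so the highest-scoring one is shown first."""
    return sorted(items, key=relevance, reverse=True)


# Two posts with identical reactions; only the tie to the poster differs.
close_friend = Item("Post from a frequent contact", 20, 12, 3, 0, 1)
distant = Item("Post from a never-contacted connection", 20, 0, 3, 0, 1)

feed = rank([distant, close_friend])
print([item.title for item in feed])
```

Under these assumed weights, the post from the frequent contact is ranked first even though both posts received the same likes and comments, which mirrors the Facebook example above.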

2. Social consequences: As used by the most relevant intermediaries currently forming public opinion, algorithmic processes evaluate user reactions to content. They promote and reinforce a manner of human cognition that is susceptible to distortion, and they themselves are susceptible to technical manipulation.

Use of these intermediaries for forming public opinion is leading to a structural change in public life. Key factors here are the algorithmic processes used as a basic formational tool and the leading role that user reactions play as input for these processes. Since they are the result of numerous psychological factors, these digitally assessed and, above all, impulsive public reactions are poorly suited to determining relevance in terms of traditional social values. These values, such as truth, diversity and social integration, have served in Germany until now as the basis for public opinion and public life as formed by editorial media.

The metrics signaling relevance – about which platform operators are hesitant to provide details because of competition-related factors or other reasons – are potentially problematic. This is true, first, because the operators themselves are constantly changing them. Systems such as those used by Google and Facebook are being altered on an ongoing basis; the operators experiment with and tweak almost every aspect of the user interface and other platform features in order to achieve specific goals such as increased interactivity. Each of these changes can potentially impact the metrics that the platforms themselves capture to measure relevance.

A good example is the “People you may know” feature used by Facebook which provides users with suggestions for additional contacts based on assessments of possible acquaintances within the network. When this function was introduced, the number of links made every day within the Facebook community immediately doubled. The network of relationships displayed on such platforms is thus dependent on the products and services that the operators offer. At the same time, the networks of acquaintances thus captured also become variables in the metrics determining what is relevant. Whoever makes additional “friends” is thus possibly shown different content.

A further problem with the metrics collected by the platform operators has to do with the type of interaction for which such platforms are optimized. A key design maxim is that interactions should be as simple and convenient as possible in order to maximize the probability of their taking place. Clicking on a “like” button or a link demands no cognitive effort whatsoever, and many users are evidently happy to indulge this lack of effort. Empirical studies suggest, for example, that many articles in social networks forwarded with a click to the user’s circle of friends could not possibly have been read. Users thus disseminate media content after having seen only the headline and introduction. To some extent they deceive the algorithm and, with it, their “friends and followers” into believing that they have read the text.

The ease of interaction also promotes cognitive distortions that have been known to social psychologists for years. A prime example is the availability heuristic: If an event or memory can easily be recalled, it is assumed to be particularly probable or common. The consequence is that users frequently encounter unread media content that has been forwarded due to a headline, and the content is later remembered as being “true” or “likely.” This is also the case when the text itself makes it clear that the headline is a grotesque exaggeration or simply misleading.

A number of other psychological factors also play an important role here, for example the fact that people use social media in particular not only for informational purposes, but also as a tool for identity management, with some media content being forwarded only to demonstrate the user’s affiliation with a certain political camp, for example. Moreover, the design of many digital platforms explicitly and intentionally encourages a fleeting, emotional interaction with content. Studies of networking platforms indeed show that content which rouses emotion is commented on and forwarded particularly often – above all when negative emotions are involved.

Such an emotional treatment of news content can lead to increased societal polarization, a hypothesis for which initial empirical evidence already exists, especially in the United States. At the same time, however, such polarizing effects seem to be dependent on a number of other factors such as a country’s electoral system. Societies with first-past-the-post systems such as the US are potentially more liable to extreme political polarization than those with proportional systems, in which ruling coalitions change and institutionalized multiparty structures tend to balance out competing interests. Existing societal polarization presumably influences and is influenced by the algorithmic sorting of media content. For example, one study shows that Facebook users who believe in conspiracy theories tend over time to turn to the community of conspiracy theorists holding the same view. This process is possibly exacerbated by algorithms that present them more and more often with the relevant content. These systems could in fact result in the creation of so-called echo chambers, at least among people with extremist views.

Technical manipulation can also influence the metrics that intermediaries use to ascertain relevance. So-called bots – partially autonomous software applications that can be disguised as real users, for example in social networks – can massively distort the volume of digital communication occurring around certain topics. According to one study, during the 2016 presidential election in the US, 400,000 such bots were in use on Twitter, accounting for about one-fifth of the entire discussion of the candidates’ TV debates. It is not clear to what extent these types of automated systems actually influence how people vote. What is clear is that the responses they produce – clicks, likes, shares – are included in the relevance assessments produced by ADM systems. Bots can thus make an article seem so interesting that an algorithm will then present it to human users.
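A small sketch can make this mechanism concrete. It assumes, hypothetically, a system that ranks articles purely by the number of reactions they receive. The account names, article titles and counts are invented and are not taken from the study cited above.

```python
from collections import Counter


def engagement(reactions):
    """Count reactions per article; `reactions` is a list of (account, article) pairs."""
    return Counter(article for _account, article in reactions)


# Invented data: 40 human reactions, most of them on the sober report.
human = [(f"user{i}", "sober report") for i in range(30)]
human += [(f"user{i}", "sensational claim") for i in range(30, 40)]

# Invented data: 50 automated accounts all reacting to the same article.
bots = [(f"bot{i}", "sensational claim") for i in range(50)]

organic = engagement(human)          # what human users alone signal
observed = engagement(human + bots)  # what a reaction-counting system sees

organic_top = organic.most_common(1)[0][0]
observed_top = observed.most_common(1)[0][0]
print(organic_top, "->", observed_top)
```

In this toy setup the bots do not change a single human reaction, yet they flip which article a purely reaction-driven ranking would treat as the most relevant.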

In sum, it can be said that the relevance assessments that algorithmic systems create for media content do not necessarily reflect criteria that are desirable from a societal perspective. Basic values such as truthfulness or social integration do not play a role. The main goal is to increase the probability of an interaction and the time users spend visiting the relevant platform. Interested parties whose goal is a strategic dissemination of disinformation can use these mechanisms to further their cause: A creative, targeted lie can, on balance, prove more emotionally “inspiring” and more successful within such systems – and thus have a greater reach – than the boring truth.

3. Solutions: Algorithmic sorting of content is at the heart of the complex interdependencies affecting public life in the digital context. This is where solutions must be applied.

Algorithms that sort content and personalize how it is assembled form the core of the complex, interdependent process underlying public communication and the formation of public opinion. That is why solutions must be found here first. The most important areas that will need action in the foreseeable future are facilitating external research and evaluation, strengthening the diversity of algorithmic processes, anchoring basic social values (e.g. by focusing on professional ethics) and increasing awareness among the public.

A first goal, one that is comparatively easy to achieve, is making users more aware of the processes and mechanisms described here. Studies show that many users of social networking platforms do not even know that such sorting algorithms exist, let alone how they work. Educational responses, including in the area of continuing education, would thus be appropriate, along with efforts to increase awareness of disinformation and decrease susceptibility to it, for example through the use of fact-based materials.

Platform operators themselves clearly have more effective possibilities for intervention. For example, they could do more to ensure that values such as appropriateness, responsibility and competency are adhered to when the relevant systems are being designed and developed. A medium-term goal could be defining industry-wide professional ethics for developers of ADM systems.

Moreover, specialists who do not work for platform operators themselves should be in a position to evaluate and research the impact of the operators’ decisions. Until now it has been difficult if not impossible for external researchers or government authorities to gain access to the required data, of which operators have vast amounts at their disposal. Neither the design decisions made by platform operators nor the impacts of those decisions on individual users are transparent to any significant degree. Systematic distortions, for example in favor of one political viewpoint or another, are difficult to identify using currently available data. More transparency – through a combination of industry self-regulation and, where necessary, legislative measures – would make it possible to gain an unbiased understanding of the actual social consequences of algorithmic sorting and to identify potential dangers early on. Making it easier to conduct research would also stimulate an objective, solution-oriented debate on the issue and could identify new solutions. Measures like these could also make it easier to design algorithmic systems that increase participation. This would foster a more differentiated view of algorithmic processes. At the same time, it could increase trust in systems designed to benefit all of society.
