Discussions about the societal consequences of algorithmic decision-making systems are omnipresent. A growing number of guidelines for the ethical development of so-called artificial intelligence (AI) have been put forward by stakeholders from the private sector, civil society, and the scientific and policymaking spheres. The Bertelsmann Stiftung’s Algo.Rules are among this body of proposals. However, it remains unclear how organizations that develop and deploy AI systems should implement precepts of this kind. In cooperation with the nonprofit VDE standards-setting organization, we are seeking to bridge this gap with a new working paper that demonstrates how AI ethics principles can be put into practice.

With the increasing use of algorithmic decision-making (ADM) systems in all areas of life, discussions about a “European approach to AI” are becoming more urgent. Political stakeholders at the German and European levels describe this approach using ideas such as “human-centered” systems and “trustworthy AI.” A large number of ethical guidelines for the design of algorithmic systems have been published, with most agreeing on the importance of values such as privacy, justice and transparency.

In April 2019, the Bertelsmann Stiftung published its Algo.Rules, a set of nine principles for the ethical development and use of algorithmic systems. We argued that these criteria should be integrated from the start when developing any system, enabling them to be implemented by design. However, like many initiatives, we currently face the challenge of making sure that our principles are actually put into practice.

Making general ethical principles measurable!

In order to take this next step, general ethical principles need to be made measurable. Currently, values such as transparency and justice are understood in many different ways by different people. This leads to uncertainty within the organizations developing AI systems, and impedes the work of oversight bodies and watchdog organizations. The lack of specific and verifiable principles thereby undermines the effectiveness of ethical guidelines.

In response, the Bertelsmann Stiftung has created the interdisciplinary AI Ethics Impact Group in cooperation with the nonprofit VDE standards-setting organization. With our joint working paper “AI Ethics: From Principles to Practice – An Interdisciplinary Framework to Operationalize AI Ethics,” we seek to bridge this current gap by explaining how AI ethics principles could be operationalized and put into practice on a European scale. The AI Ethics Impact Group includes experts from a broad range of fields, including computer science, philosophy, technology impact assessment, physics, engineering and the social sciences. The working paper was co-authored by scientists from the Algorithmic Accountability Lab of the TU Kaiserslautern, the High-Performance Computing Center Stuttgart (HLRS), the Institute of Technology Assessment and Systems Analysis (ITAS) in Karlsruhe, the Institute for Philosophy of the Technical University Darmstadt, the International Center for Ethics in the Sciences and Humanities (IZEW) at the University of Tübingen, and the Thinktank iRights.Lab, among other institutions.

A proposal for an AI ethics label

At its core, our working paper proposes the creation of an ethics label for AI systems. In a manner similar to the European Union’s energy-efficiency label for household appliances, such a label could be used by AI developers to communicate the quality of their products. For consumers and organizations planning to use AI, such a label could enhance comparability between available products, allowing quick assessments to be made as to whether a certain system fulfilled the necessary ethical requirements for a given application. Through these mechanisms, the approach could incentivize the ethical development of AI beyond the requirements currently enshrined in law. Based on a meta-analysis of more than 100 AI ethics guidelines, the working paper proposes that transparency, accountability, privacy, justice, reliability and environmental sustainability be established as the six key values receiving ratings under the label system.

The proposed label would not be a simple yes/no seal of quality. Rather, it would provide nuanced ratings of the AI system’s relevant criteria, as illustrated in the graphic below.

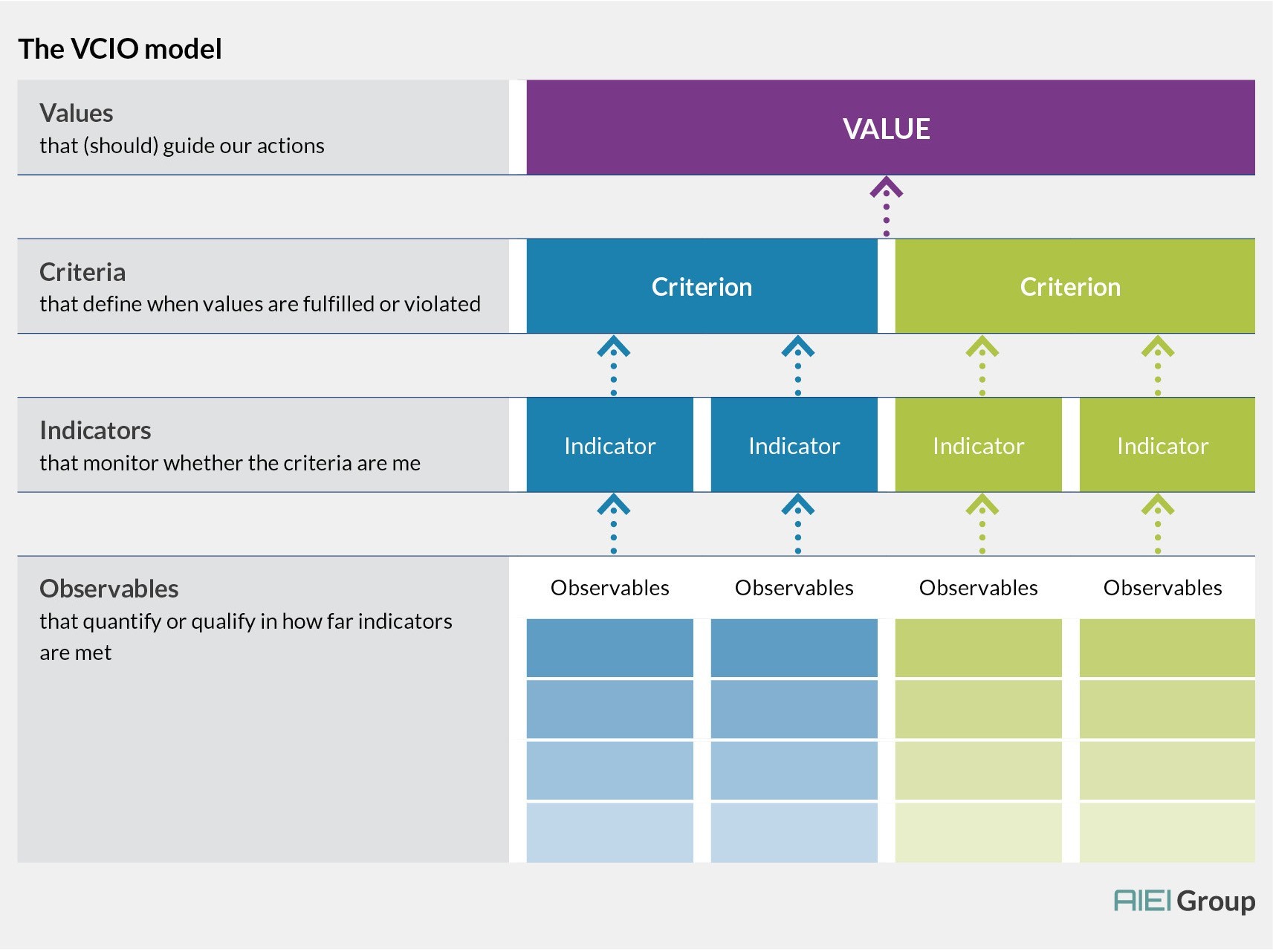
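To make the idea of a nuanced, comparable label more tangible, the following minimal sketch represents a label as one rating per value and checks it against the minimum ratings required for a given application. The six value names come from the working paper as summarized above. The integer scale (higher is better), the function name `unmet_requirements`, and all example ratings and thresholds are assumptions made purely for illustration.

```python
# The six values proposed for the label, as listed in the text above.
VALUES = (
    "transparency",
    "accountability",
    "privacy",
    "justice",
    "reliability",
    "environmental sustainability",
)


def unmet_requirements(label, minimums):
    """Return the values whose rating is below the required minimum.

    Assumption: ratings are integers where a higher number is better.
    A value missing from `label` counts as 0 (worst); a value missing
    from `minimums` imposes no requirement.
    """
    return [v for v in VALUES if label.get(v, 0) < minimums.get(v, 0)]


# Hypothetical label of one AI system (ratings are invented).
label = {
    "transparency": 2,
    "accountability": 3,
    "privacy": 3,
    "justice": 3,
    "reliability": 4,
    "environmental sustainability": 2,
}

# Hypothetical minimum ratings for two different application contexts.
industrial = {"transparency": 1, "reliability": 3}
medical = {"transparency": 3, "privacy": 3, "reliability": 4}

print(unmet_requirements(label, industrial))  # -> []
print(unmet_requirements(label, medical))     # -> ['transparency']
```

The same label can therefore be sufficient in one context and insufficient in another, which is the kind of quick comparison the label is meant to support.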

The VCIO model concretizes general values

The so-called VCIO model (referring to values, criteria, indicators and observables) can be used to define the requirements needed to achieve a certain rating. As the scientific basis for the AI Ethics Impact Group’s proposal, the model can help concretize general values by breaking them down into criteria, indicators and measurable observables. This in turn allows their implementation to be measured and evaluated. The paper describes how the model might function for the values of transparency, accountability and justice.

Functioning of the VCIO model and its different layers – values, criteria, indicators and observables.

The ultimate impact of any algorithmic system will necessarily be influenced both by its technical features and the way the technology is organizationally embedded. Therefore, requirements should be defined that relate both to the technical system itself (system standards) and to the processes associated with its development and use (process standards). For the value of transparency, for example, requirements could include: 1) use of the simplest and most intelligible algorithmic system, taking into account the issues of efficiency and accuracy; 2) provision of information to all affected parties regarding the AI system’s use; and 3) provision of information that is sufficiently oriented toward the needs of the target group.

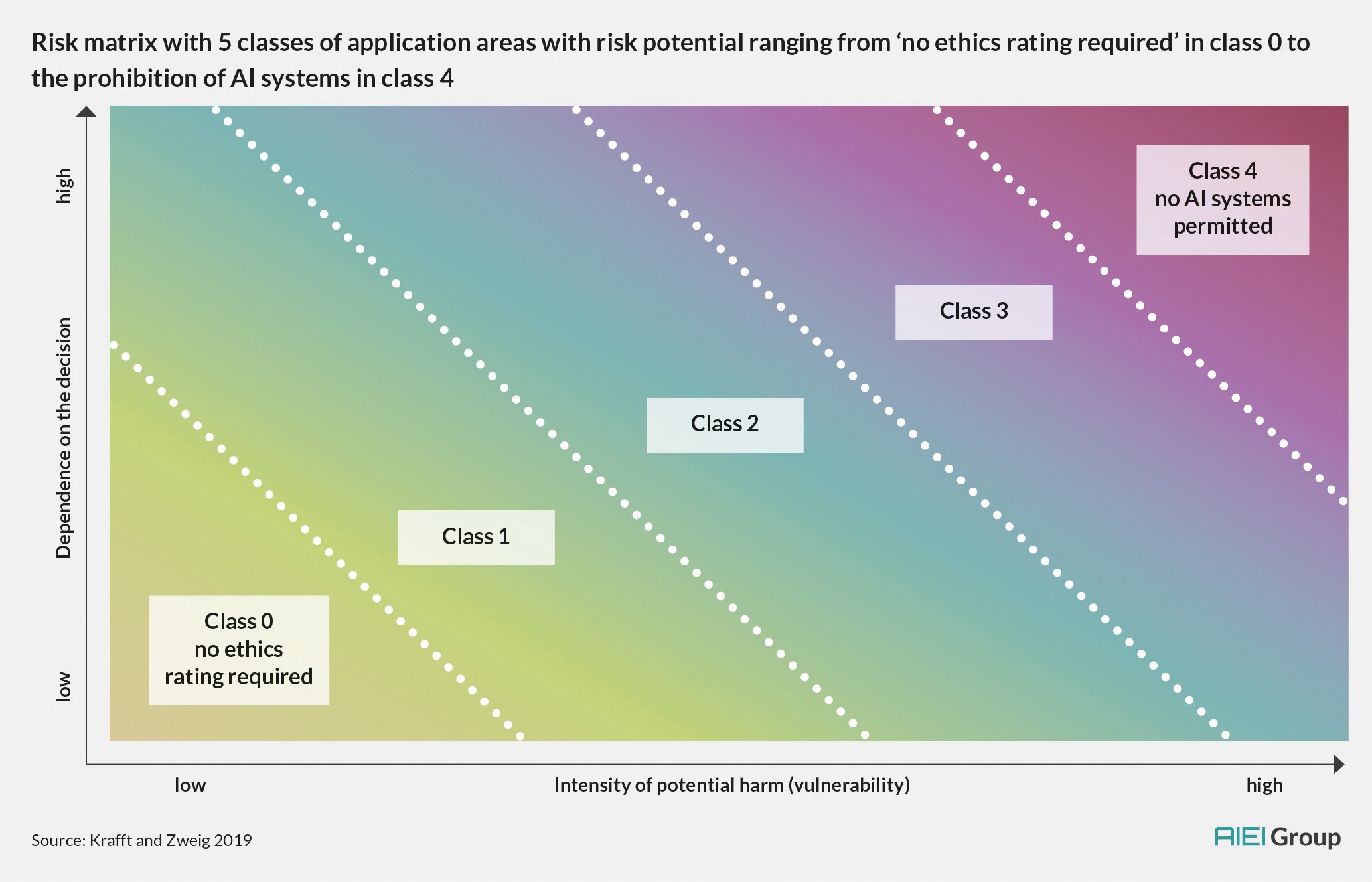
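The four layers of the VCIO model can be read as a simple nested data structure. The sketch below is one possible rendering of that structure, not the working paper's formal definition. The class names mirror the four layers. The integer levels, the `observed` field and the weakest-link aggregation (taking the minimum) are assumptions introduced only for this example, and the indicator questions are loosely modeled on the transparency requirements mentioned above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Observable:
    """A measurable statement and the level it corresponds to."""
    description: str
    level: int  # hypothetical scale: 0 is worst, higher is better


@dataclass
class Indicator:
    """A question about the system, answered by selecting one observable."""
    question: str
    observables: list[Observable]
    observed: int = 0  # index of the observable found during assessment

    def level(self) -> int:
        return self.observables[self.observed].level


@dataclass
class Criterion:
    name: str
    indicators: list[Indicator] = field(default_factory=list)

    def level(self) -> int:
        # Assumption: a criterion is only as strong as its weakest indicator.
        return min(ind.level() for ind in self.indicators)


@dataclass
class Value:
    name: str
    criteria: list[Criterion] = field(default_factory=list)

    def level(self) -> int:
        # Same assumed weakest-link rule, one layer up.
        return min(c.level() for c in self.criteria)


# Illustrative breakdown of the value "transparency" (all content invented).
transparency = Value("transparency", [
    Criterion("disclosure of use", [
        Indicator(
            "Are affected parties informed that an AI system is used?",
            [Observable("no information provided", 0),
             Observable("information available on request", 1),
             Observable("information provided proactively", 2)],
            observed=2,
        ),
    ]),
    Criterion("intelligibility", [
        Indicator(
            "Is the information oriented toward the target group?",
            [Observable("not considered", 0),
             Observable("plain-language summary available", 1),
             Observable("tested with members of the target group", 2)],
            observed=1,
        ),
    ]),
])

print(transparency.level())  # -> 1
```

Other aggregation rules, such as weighted averages, would fit the same structure. The minimum is used here only because it keeps a strong result on one criterion from masking a weak result on another.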

The risk matrix helps classify AI application scenarios

The decision as to which levels on the ethics label should be considered ethically acceptable would necessarily vary across application cases. For example, the use of an AI system in an industrial process might be subject to lower transparency requirements than if the same system were applied in a medical procedure requiring the processing of personal data.

To help in such decisions, the working paper proposes the use of a risk matrix to guide the classification of different application cases. Instead of a binary classification into high-risk and non-high-risk cases (as outlined in the European Commission’s White Paper On Artificial Intelligence), our risk matrix uses a two-dimensional approach that does more justice to the diversity of application cases. The horizontal x-axis represents the intensity of potential harm. The vertical y-axis represents how dependent the affected person or persons are on the decision. The assessment of the intensity of potential harm should reflect any potential impact on fundamental rights, the number of people affected and any risks for society as a whole, for instance if democratic processes are affected. The degree of dependence on the outcome of the decision reflects issues such as an affected individual’s ability to avoid exposure, switch to another system or challenge a decision.

We further recommend a division into five different classes of risk (see diagram). Systems that do not require any regulation whatsoever would fall into class 0. The class reflecting the greatest amount of risk, in this case class 4, would be used to describe situations in which AI systems should not be applied at all, due to the high level of associated risk. For application cases falling between these two extremes, system requirements would need to be defined through use of the VCIO model.

Illustration of the two-dimensional risk-classification approach, along with the proposed division into five risk classes (numbered as 0 – 4).
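As a rough illustration of how a two-dimensional assessment can be turned into one of five classes, consider the sketch below. It is not the mapping used in the working paper, which defines the class boundaries graphically. Scoring both axes between 0 and 1, averaging them and cutting the result at evenly spaced thresholds are all assumptions, as are the function names `risk_class` and `consequence`.

```python
def risk_class(harm, dependence):
    """Map two scores in [0, 1] to a risk class from 0 to 4.

    harm:       intensity of potential harm (x-axis)
    dependence: dependence of affected persons on the decision (y-axis)

    Assumption: the class depends on the average of both scores, cut at
    hypothetical, evenly spaced thresholds.
    """
    if not (0.0 <= harm <= 1.0 and 0.0 <= dependence <= 1.0):
        raise ValueError("both scores must lie between 0 and 1")
    combined = (harm + dependence) / 2
    thresholds = (0.2, 0.4, 0.6, 0.8)  # hypothetical class boundaries
    # Count how many boundaries the combined score reaches or exceeds.
    return sum(combined >= t for t in thresholds)


def consequence(cls):
    """Describe what each class implies, following the text above."""
    if cls == 0:
        return "no regulation required"
    if cls == 4:
        return "AI system should not be applied"
    return "define requirements using the VCIO model"


print(risk_class(0.1, 0.1), consequence(risk_class(0.1, 0.1)))
print(risk_class(0.5, 0.5), consequence(risk_class(0.5, 0.5)))
print(risk_class(0.9, 0.9), consequence(risk_class(0.9, 0.9)))
```

With these assumed thresholds, the three example calls fall into classes 0, 2 and 4 respectively. A real implementation would need boundaries agreed on by policymakers or standards bodies rather than an arithmetic average.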

This systematic approach could be adopted by policymakers and oversight bodies as a means of concretizing requirements for AI systems and ensuring effective control. Organizations intending to implement AI systems in their working processes could use the model to define requirements for their purchasing and procurement processes.

A toolset for further work on AI ethics

The solutions presented in the working paper (the ethics label, the VCIO model and the risk matrix) have yet to be tested in practice and need to be developed further. However, we believe that the proposals may help to advance the much-needed debate on how AI ethics principles can be put into practice effectively. Policymakers, regulators and standards-setting organizations should regard the working paper as a toolset to be further developed and reflected upon in an interdisciplinary and participatory manner.

We look forward to continuing the conversation!

This text is licensed under a Creative Commons Attribution 4.0 International License
